ARIES: ARt Image Exploration Space

ARIES is a digital initiative begun by members of the Digital Art History Lab (DAHL) in concert with teams from New York University’s Tandon School of Engineering and the Universidade Federal Fluminense, Brazil. It stems from our frustration with the inability of currently available software to manipulate images in a way that is truly intuitive and useful for art historians. Traditionally, art historians have used light boxes or tables on which they placed slides or other reproductive images. In the physical world, they could move these around at will, organizing and reorganizing images as desired. In this way, images from multiple sources were brought together and compared to identify similarities, differences, stylistic links, and relationships for further research. The transition from analog photographs and transparencies to digital image files has rendered this workflow obsolescent, yet art historians still lack well-designed, unified computational tools that can replace what is possible in the analog world.

The system we have designed, which is now in beta, is called ARIES (ARt Image Exploration Space). ARIES is an interactive image manipulation system for exploring and organizing fine art images (of paintings, drawings, prints, sculpture, etc.) taken from multiple sources (e.g., websites, digital photographs, scans) in a virtual space. ARIES provides a novel, intuitive interface for exploring, annotating, rearranging, and grouping art images freely in a single workspace, using organizational ontologies (collections, etc.) drawn from existing best practices in art history. The system offers multiple ways to compare images, from dynamic overlays analogous to a physical light box to advanced image analysis and feature-matching functions available only through computational image processing. Users may also import data into and export data from ARIES.

ARIES has been developed by a multidisciplinary team including Claudio Silva and Juliana Freire, professors in the Department of Computer Science and Engineering at NYU Tandon and the directors/faculty members of the VIDA Center; Lhaylla Crissaff and Marcos Lage, visiting research scholars at NYU Tandon and professors at Universidade Federal Fluminense, Brazil; Joao Rulff, Research Associate at NYU Tandon; and Louisa Wood Ruby and Samantha Deutch, the Frick Art Research Library’s Head of Research and Assistant Director of the Center for the History of Collecting, respectively. We are grateful to an anonymous donor for their support of this project.

Try ARIES: on the site you can watch videos and create your own account.

NYU Press Release

Mapping the Frick Photograph Campaigns, 1922–1967

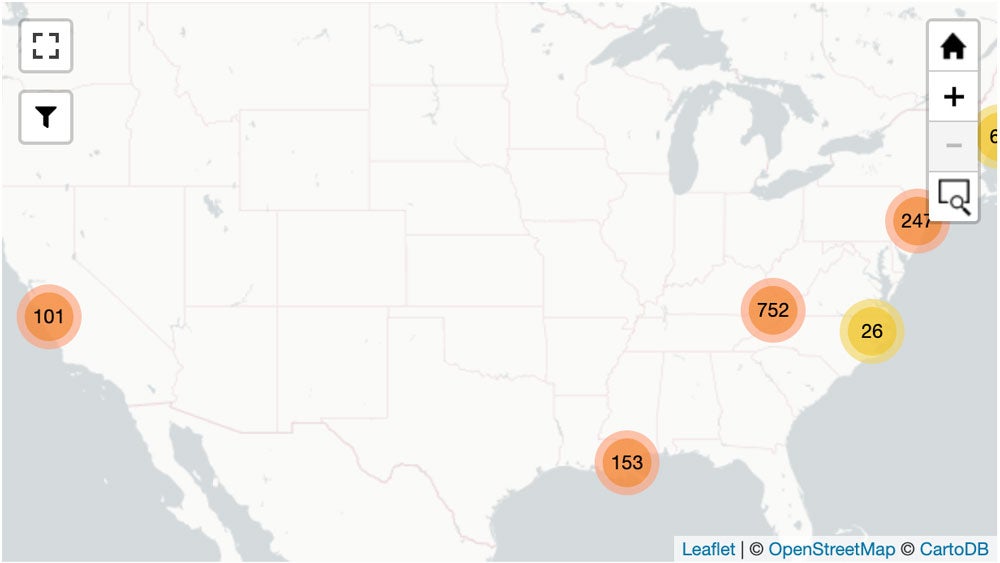

The Digital Art History Lab and the Center for Advanced Research of Spatial Information at Hunter College, CUNY, are collaborating on an online, interactive tool that uses Geographic Information Systems (GIS) technologies to document the movement of Frick Art Research Library photographers across the United States as they recorded paintings and sculptures in private collections and little-known public collections. Several such expeditions were completed between 1922 and 1967. The resulting 35,000 negatives from these photograph campaigns, all of which have been digitized, form one of the most valuable resources in the Photoarchive, documenting many objects that either remain inaccessible to the general public or have been lost, destroyed, or altered in the intervening decades.

The first phase of development is complete, and ten of these expeditions have been mapped. Please visit the Frick Art Research Library’s Photoarchive webpage to access this innovative digital tool.

The Photoarchive and DAHL are grateful to the following interns for their contributions to the successful completion of the first phase of this project: Eileen Ogle; Liliana Morales; Ava Katz; and Paul Bendernagel, who designed and developed the digital map.

AI and the Digital Photoarchive

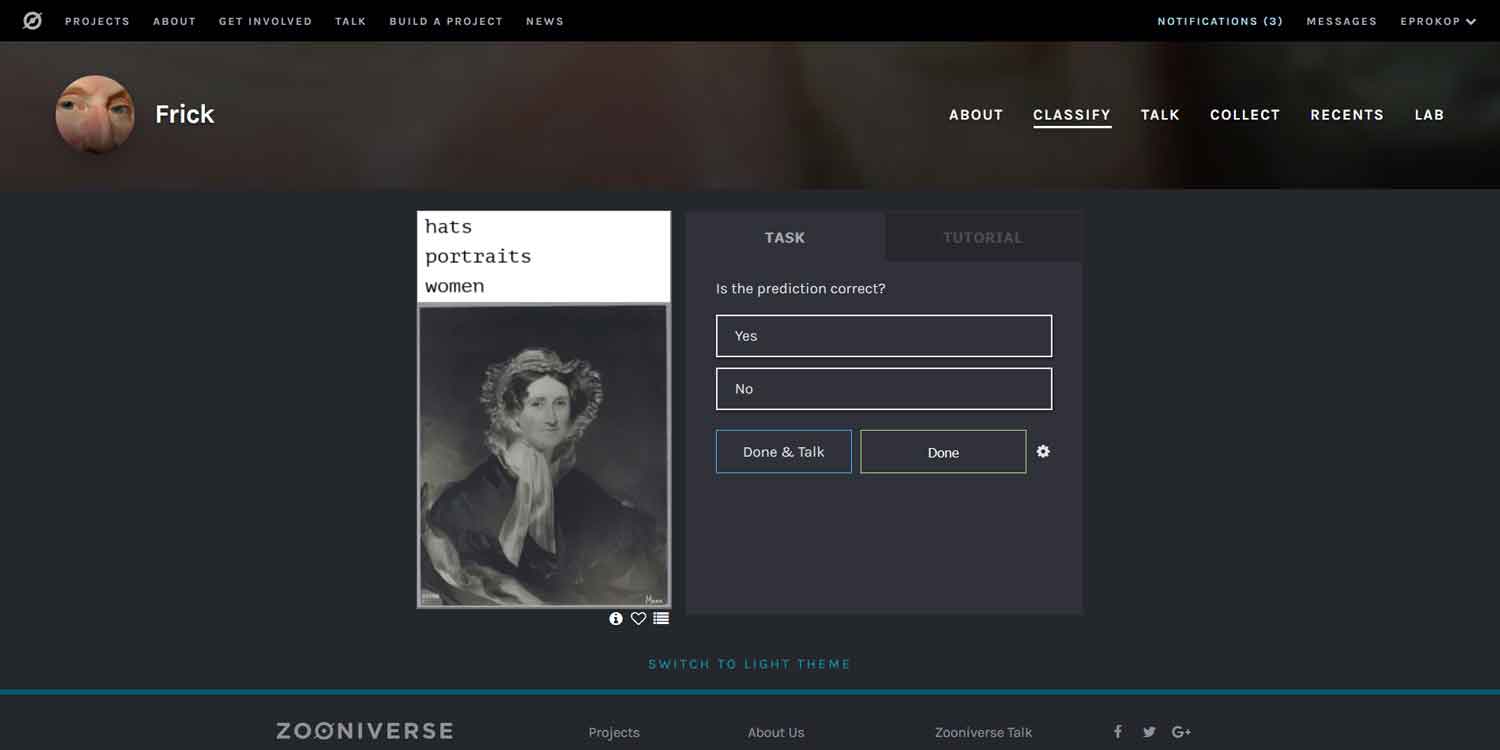

To make the best use of staff time and enhance the discoverability of the Photoarchive’s online collection on Frick Digital Collections, the Photoarchive has partnered with Stanford and Cornell Universities on a pilot project that uses Artificial Intelligence (AI) and machine learning to train computers to apply local classification headings to these images. For this pilot project, the Stanford and Cornell team focused on a dataset of approximately 30,000 reproductions of American portraits and applied VGG, a popular deep neural network architecture, to develop automatic image classifiers. Automatic image classifiers have the potential to become powerful tools for metadata creation and image retrieval: preliminary experiments show promising results, and future work will extend the pilot project to the entire Photoarchive collection. We hope this project will inspire other cultural institutions to explore the opportunities afforded by applying AI to image collections, as it promises to save archivists time and effort and to provide researchers sifting through massive image libraries with the metadata they need.

To vet the preliminary results, the team developed an app that lets Photoarchive and DAHL staff efficiently confirm accurate classification headings and correct erroneous or incomplete ones. This tool is in development and will soon be available on the Zooniverse platform so that the public can contribute to the success of this project.